MatAnyone GitHub Guide

Installation

Clone Repository

To begin, clone the MatAnyone repository and navigate into the project directory:

git clone https://github.com/pq-yang/MatAnyone

cd MatAnyone

Image Preview

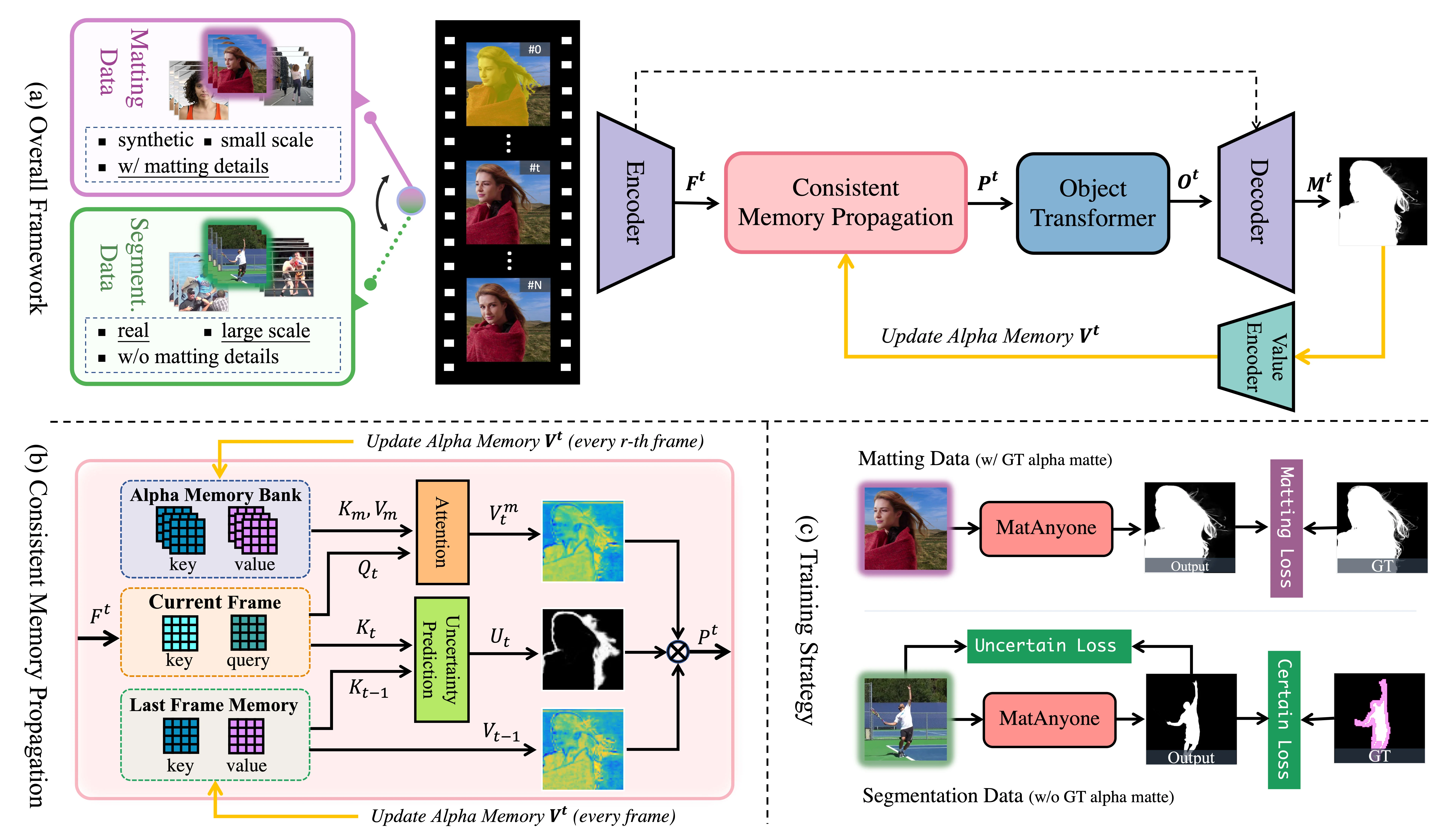

For more information, see the official MatAnyone project page (pq-yang.github.io/projects/MatAnyone/).

Create Conda Environment and Install Dependencies

Create a new Conda environment and install dependencies:

create new conda env

conda create -n matanyone python=3.8 -y

conda activate matanyone

install python dependencies

pip install -e .

[optional] install dependencies for Gradio demo

pip3 install -r hugging_face/requirements.txt

Inference

Download Model

Download the pretrained model from the MatAnyone v1.0.0 release and place it in the pretrained_models folder. If you skip this step, the model is downloaded automatically during the first inference run.
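To see whether a manual download is already in place, a small check like the one below may help. It is only a sketch: the helper name `find_checkpoint` is hypothetical, and the path `pretrained_models/matanyone.pth` is taken from this guide rather than read from the project's configuration.

```python
from pathlib import Path
from typing import Optional

# Relative location taken from this guide; adjust if your checkout differs.
CHECKPOINT = Path("pretrained_models") / "matanyone.pth"


def find_checkpoint(repo_root: str = ".") -> Optional[Path]:
    """Return the checkpoint path if the file exists under repo_root, else None."""
    candidate = Path(repo_root) / CHECKPOINT
    return candidate if candidate.is_file() else None


if __name__ == "__main__":
    found = find_checkpoint()
    if found is None:
        # Per the guide, the model should then be fetched on the first inference run.
        print("Checkpoint not found locally.")
    else:
        print(f"Using local checkpoint: {found}")
```

Run it from the repository root, or pass the path to your clone as `repo_root`.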

Your directory structure should look like this:

pretrained_models
   |- matanyone.pth

Quick Test

We provide some example inputs in the inputs folder. Each run requires a video and a segmentation mask of its first frame. Segmentation masks can be obtained from interactive segmentation models such as the SAM2 demo.
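Because every run needs both a video and a mask, it can be useful to confirm the pairing before starting. The sketch below assumes the naming used by the example inputs in this guide: videos (or frame folders) live in `inputs/video`, and masks in `inputs/mask` are named `<video name>.png` or `<video name>_<k>.png`. The helper `pair_inputs` is hypothetical and not part of MatAnyone.

```python
import re
from pathlib import Path
from typing import Dict, List

# Video container formats listed in this guide.
VIDEO_SUFFIXES = {".mp4", ".mov", ".avi"}


def pair_inputs(inputs_dir: str = "inputs") -> Dict[str, List[str]]:
    """Map each video file or frame folder to the mask files that match its name."""
    video_dir = Path(inputs_dir) / "video"
    mask_dir = Path(inputs_dir) / "mask"
    masks = sorted(p.name for p in mask_dir.glob("*.png"))
    pairs: Dict[str, List[str]] = {}
    for entry in sorted(video_dir.iterdir()):
        # Skip anything that is neither a frame folder nor a known video format.
        if not (entry.is_dir() or entry.suffix.lower() in VIDEO_SUFFIXES):
            continue
        stem = entry.name if entry.is_dir() else entry.stem
        # Accept "<stem>.png" (single target) or "<stem>_<digits>.png" (one per target).
        pattern = re.compile(rf"^{re.escape(stem)}(_\d+)?\.png$")
        pairs[entry.name] = [m for m in masks if pattern.match(m)]
    return pairs


if __name__ == "__main__":
    if Path("inputs/video").is_dir():
        for video, found in pair_inputs().items():
            print(video, "->", found if found else "NO MASK FOUND")
```

An empty list for a video means no first-frame mask was found under the assumed naming, so that video cannot be processed until one is added.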

Example Directory Structure:

inputs
   |- video
      |- test-sample0          # folder containing all frames
      |- test-sample1.mp4      # .mp4, .mov, .avi
   |- mask
      |- test-sample0_1.png    # mask for person 1
      |- test-sample0_2.png    # mask for person 2
      |- test-sample1.png

Run Inference

Run the following commands to test MatAnyone:

Single Target

short video; 720p

python inference_matanyone.py -i inputs/video/test-sample1.mp4 -m inputs/mask/test-sample1.png

short video; 1080p

python inference_matanyone.py -i inputs/video/test-sample2.mp4 -m inputs/mask/test-sample2.png

long video; 1080p

python inference_matanyone.py -i inputs/video/test-sample3.mp4 -m inputs/mask/test-sample3.png

Multiple Targets (Using Mask Control)

obtain matte for target 1

python inference_matanyone.py -i inputs/video/test-sample0 -m inputs/mask/test-sample0_1.png --suffix target1

obtain matte for target 2

python inference_matanyone.py -i inputs/video/test-sample0 -m inputs/mask/test-sample0_2.png --suffix target2

Additional Options:

- To save results as per-frame images, use --save_image.

- To limit the maximum input resolution, use --max_size. If min(w, h) exceeds this limit, the video will be downsampled. The default setting has no limit.
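The commands and options above can also be assembled in a script, which is convenient when producing one matte per target. The following is a minimal sketch, not part of MatAnyone: `build_command` and `downsampled_size` are hypothetical helpers, `--max_size` is assumed to take an integer value, and the resizing rule (scale so the shorter side equals the limit, keeping the aspect ratio) is an illustration of the description above. The script's actual rounding may differ.

```python
from typing import List, Optional, Tuple


def build_command(video: str, mask: str, suffix: Optional[str] = None,
                  save_image: bool = False,
                  max_size: Optional[int] = None) -> List[str]:
    """Assemble an argv list for inference_matanyone.py from the flags in this guide."""
    cmd = ["python", "inference_matanyone.py", "-i", video, "-m", mask]
    if suffix is not None:
        cmd += ["--suffix", suffix]
    if save_image:
        cmd.append("--save_image")
    if max_size is not None:
        # Assumption: the flag is followed by an integer pixel limit.
        cmd += ["--max_size", str(max_size)]
    return cmd


def downsampled_size(w: int, h: int, max_size: Optional[int]) -> Tuple[int, int]:
    """Illustrative rule only: shrink so that min(w, h) equals max_size."""
    short = min(w, h)
    if max_size is None or short <= max_size:
        return w, h  # no limit set, or already within the limit
    scale = max_size / short
    return round(w * scale), round(h * scale)


if __name__ == "__main__":
    masks = ["inputs/mask/test-sample0_1.png", "inputs/mask/test-sample0_2.png"]
    for k, mask in enumerate(masks, start=1):
        # Prints one command per target; pass the list to subprocess.run to execute it.
        print(" ".join(build_command("inputs/video/test-sample0", mask, suffix=f"target{k}")))
```

Under the assumed rule, a 3840x2160 input with a limit of 1080 would be reduced to 1920x1080, while a 1280x720 input would be left unchanged.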

Interactive Demo

MatAnyone provides an interactive Gradio demo to simplify segmentation mask preparation. You can launch the demo locally to test videos or images interactively.

Steps to Launch the Demo:

cd hugging_face

install dependencies (FFmpeg required)

pip3 install -r requirements.txt

launch the demo

python app.py

Once launched, an interactive interface will appear where you can:

- Upload your video/image.

- Assign target masks with a few clicks.

- Get matting results instantly.
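Since the demo requires FFmpeg, a quick pre-flight check before launching can save a failed start. This sketch only tests whether an `ffmpeg` executable is on the PATH, using the standard library's `shutil.which`. The helper name `ffmpeg_available` is hypothetical, and the check does not verify which FFmpeg version or build the demo needs.

```python
import shutil
from typing import Callable, Optional


def ffmpeg_available(which: Callable[[str], Optional[str]] = shutil.which) -> bool:
    """Return True if an `ffmpeg` executable can be located on PATH."""
    return which("ffmpeg") is not None


if __name__ == "__main__":
    if ffmpeg_available():
        print("FFmpeg found; the demo can be launched with `python app.py`.")
    else:
        print("FFmpeg not found on PATH; install it before launching the demo.")
```

The `which` parameter exists only so the lookup can be replaced in tests; normal use calls `ffmpeg_available()` with no arguments.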

This guide provides a step-by-step setup for MatAnyone, covering installation, inference, and interactive demo usage.